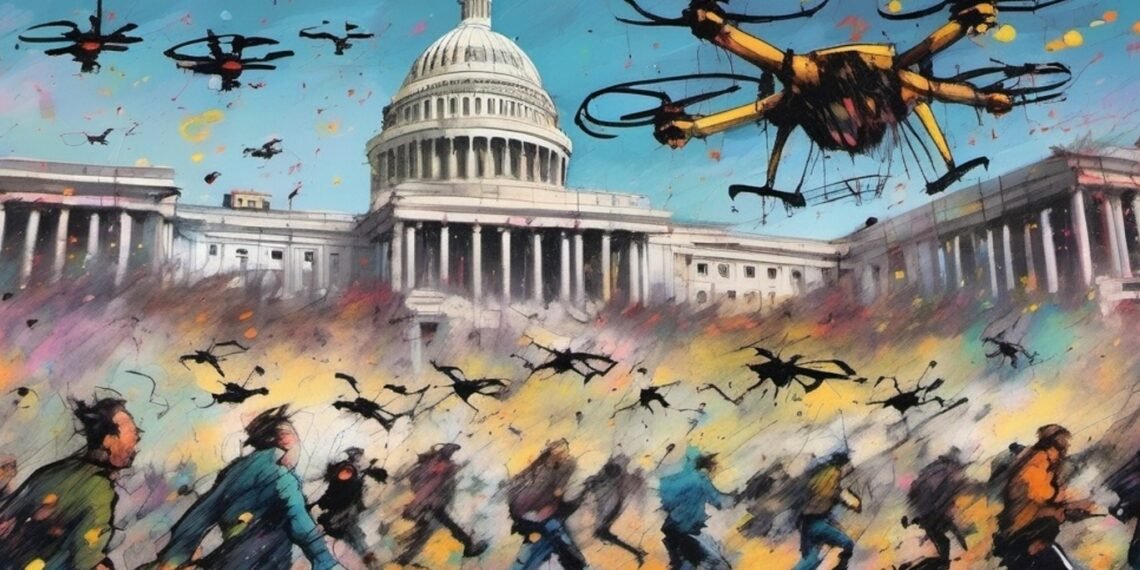

Researchers are applying artificial intelligence (AI) to lethal aircraft, raising questions about how much human control will remain in modern combat. Instead of soldiers piloting individual unmanned vehicles, militaries may deploy swarms of aerial and seaborne drones that carry out missions with limited human control. This potential shift in warfare raises ethical dilemmas about how AI should be regulated. The Pentagon faces the challenge of balancing technological advances against ethical standards. More broadly, the development, deployment, and use of AI raise serious ethical concerns worldwide. The proliferation of drones in conflicts has prompted efforts to perfect networks of autonomous vehicles that plan and work together. Beyond concerns about reduced human control in combat, there are growing fears that adversaries could exploit weaknesses in the swarm technology itself, especially if the swarms lack built-in ethical constraints.

Today's unmanned military drones and drone light shows are not intelligent swarms. True swarms use an array of sensors and AI to communicate with one another and coordinate attacks. Current drone operations face several challenges: electronic jamming, difficulty observing the battlefield through the fog of war, and energy constraints on longer-range drones. Researchers are nonetheless making progress in these areas. The speed and complexity of drone decision-making also make it difficult to maintain human oversight, which poses an ethical challenge. AI swarm technology will continue to develop rapidly, changing the nature of warfare. Opinions differ on the potential of swarm networks in modern warfare: some see them as a revolutionary step, while others see them as an evolutionary change with more limited battlefield applications.

The ainewsarticles.com article you just read is a brief synopsis; the original article can be found here: Read the Full Article…