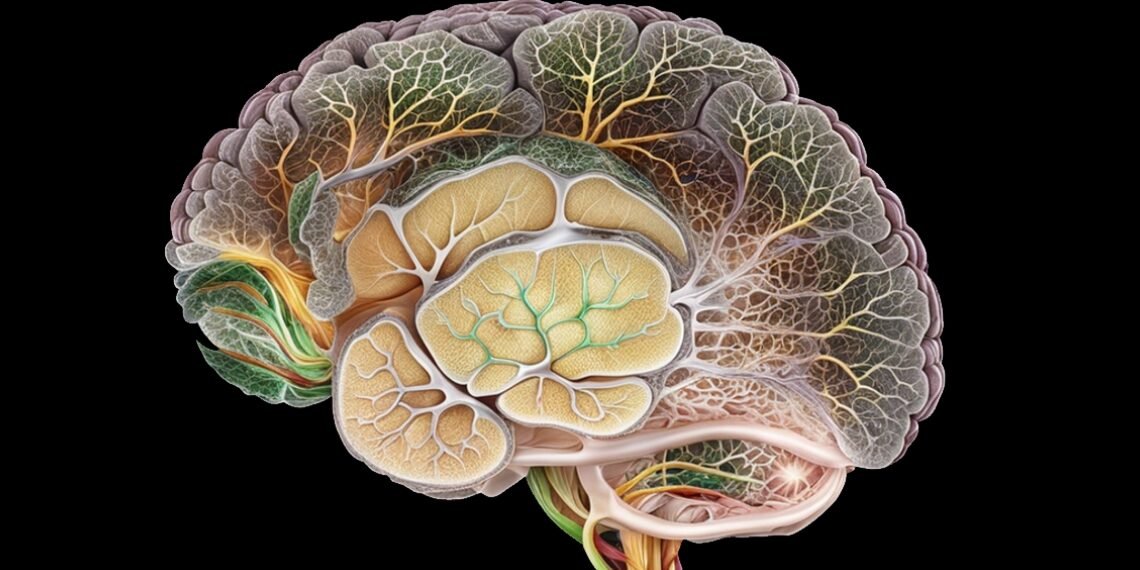

Most artificially intelligent systems are based on neural networks, algorithms inspired by biological neurons found in the brain. These networks can consist of multiple layers, visualized as inputs coming in one side and outputs going out the other. The outputs can be used to make automatic decisions, for example, the judgements needed to safely pilot a driverless car. Attacks meant to mislead a neural network can exploit vulnerabilities in any of its layers, but typically only the initial input layer is considered when engineering a defense against those attacks. Now, for the first time, researchers have enhanced a neural network's inner layers with a process involving random noise to improve both its adaptability and its resistance to external attacks.

Artificial intelligence (AI) has become widespread; chances are you have a smartphone with an AI assistant or use a search engine powered by AI. While "AI" is a broad term that can include many different ways to process information and make decisions, AI systems are often built using artificial neural networks (ANNs) modeled loosely on those of the brain. And like the brain, ANNs can get confused, whether by accident or by design. For instance, ANNs interpret visual input differently than humans do, so jumbling that input can cause mistakes with serious consequences, such as steering a driverless car into an accident. Defending ANN systems against attacks is challenging because it is not always clear why a mistake occurred. Furthermore, a mistake could be evidence of an attack, in which someone alters an input pattern to mislead an ANN system and cause it to behave incorrectly. Adding noise to the first layer of an artificial neural network is known to improve its defense against such attacks by allowing greater adaptation to different inputs, but the method is not always effective.

Researchers from the University of Tokyo Graduate School of Medicine have now developed a promising new defense strategy: adding noise to the inner layers of a neural network, rather than only to the input layer. Guided by their earlier studies of the human brain, Jumpei Ukita and Professor Kenichi Ohki looked beyond the input layer and added noise to deeper layers of the network, mirroring a phenomenon they knew from the brain. Until then, ANN scientists had neither applied the technique nor given it much credit. Contrary to expectations, adding noise to the deeper ANN layers had the dual effect of increasing the network's adaptability and significantly reducing its vulnerability to simulated attacks.

As a test, the two researchers devised a series of hypothetical attacks that went beyond the input layer by presenting misleading information to the deeper layers of the ANN. They called these attacks "feature-space adversarial examples." To defend against them, the researchers injected random noise into the deeper hidden layers of the network. Surprisingly, that targeted noise significantly enhanced both the ANN's adaptability and its defensive capabilities.
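To make the idea of hidden-layer noise concrete, here is a minimal sketch in plain Python. It is not the researchers' implementation: this synopsis does not say which layers they targeted, what kind of noise they used, or whether it was applied during training, testing, or both. The two-layer toy network, its weights, the Gaussian noise, and the names `forward` and `hidden_noise_std` are all assumptions chosen for illustration.

```python
import random


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


def linear(weights, bias, inputs):
    """Dense layer: one row of weights (plus one bias) per output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, bias)
    ]


def forward(x, params, hidden_noise_std=0.0, rng=None):
    """Two-layer forward pass with optional Gaussian noise on the hidden layer.

    The noise is added to the hidden activations, not to the input x.
    Gaussian noise is an assumption made for this sketch.
    """
    rng = rng or random.Random()
    hidden = relu(linear(params["W1"], params["b1"], x))
    if hidden_noise_std > 0.0:
        hidden = [h + rng.gauss(0.0, hidden_noise_std) for h in hidden]
    return linear(params["W2"], params["b2"], hidden)


# Hypothetical toy parameters: 2 inputs -> 3 hidden units -> 2 outputs.
params = {
    "W1": [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]],
    "b1": [0.0, 0.1, -0.1],
    "W2": [[1.0, -1.0, 0.5], [-0.5, 0.7, 0.2]],
    "b2": [0.0, 0.0],
}

x = [1.0, 2.0]
clean = forward(x, params)  # no noise anywhere
noisy = forward(x, params, hidden_noise_std=0.1, rng=random.Random(0))
print("clean output:", clean)
print("noisy output:", noisy)
```

The input `x` is identical in both calls; only the internal representation is perturbed. That is the difference between this approach and the more familiar defense of adding noise at the input layer.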

This defense against misleading inputs, which is analogous to a mechanism in the human brain, worked and proved unusually robust. The team does not intend to stop there, however, and plans to further develop and strengthen the algorithm's effectiveness against both known and emerging AI weaknesses and attack strategies.

The ainewsarticles.com article you just read is a brief synopsis; the original article can be found here: Read the Full Article…